Fine-Tuning Generative AI for Pharma: Why Generic Models Aren’t Enough

Oct 09, 2025 | 6 min read

Generative AI is changing how pharmaceutical companies create, manage, and review content. But while public tools like ChatGPT or Gemini are impressive, they weren’t built for the strict, regulated world of pharma.

In an industry where every word must be backed by data and every claim must meet compliance standards, using a generic AI model can create risk instead of efficiency. That’s why leading pharma teams are now fine-tuning generative AI models—training them on approved internal data, product libraries, and regulatory frameworks to make them safer, smarter, and compliant.

Why Generic Models Fall Short

Generic AI models aren’t trained for life sciences, and that can lead to dangerous mistakes.

Large language models (LLMs) like ChatGPT are trained on vast public data sets. They’re powerful generalists, but they lack the precision pharma requires.

According to a recent Nature Medicine study, general-purpose models “hallucinate” medical facts up to 27% of the time, especially when asked clinical or regulatory questions (Nature Medicine, 2024).

Here’s why this matters for pharma:

- No built-in compliance context: Generic AI doesn’t know which drug claims are approved or which words trigger off-label concerns.

- Data security risks: Public models may log, reuse, or expose confidential data.

- Inconsistent tone: Each pharma company has unique brand and regulatory phrasing that a public model can’t replicate.

- No traceability: Outputs lack the audit trails required by FDA 21 CFR Part 11 and EMA compliance guidelines.

Simply put, general AI may write faster—but not safer.

What Fine-Tuning Actually Means

Fine-tuning teaches an AI model your organization’s specific rules, data, and tone.

Instead of relying on generic knowledge, fine-tuning retrains the model on approved, in-house data to improve accuracy and compliance. The process typically has four parts:

- Train with approved materials: MLR-approved claims, labeling, and product monographs become the model’s foundation.

- Add compliance metadata: Each dataset is tagged with approval sources, product versions, and risk classifications.

- Validate with SMEs: Subject matter experts review sample outputs to confirm accuracy and compliance.

- Retrain regularly: The model learns from new approved content, so it evolves as regulations or brand guidelines change.
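As a rough illustration of the first two steps above, the sketch below tags each candidate training example with compliance metadata and keeps only approved, lower-risk records. The `TrainingRecord` schema, the risk labels, and the `MLR-0001` style identifiers are all hypothetical; a real pipeline would take these from your own claims library and approval system.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Illustrative ordering of risk labels; a real taxonomy would come from Compliance.
RISK_ORDER = {"low": 0, "medium": 1, "high": 2}


@dataclass(frozen=True)
class TrainingRecord:
    """One candidate training example plus its compliance metadata (assumed schema)."""
    text: str
    product: str
    product_version: str
    approval_source: Optional[str]  # hypothetical MLR approval reference; None if unapproved
    approved_on: Optional[date]     # None if the content was never approved
    risk_class: str                 # one of the keys in RISK_ORDER


def select_approved(records: List[TrainingRecord], max_risk: str = "medium") -> List[TrainingRecord]:
    """Keep records that carry an approval reference and date and do not exceed max_risk."""
    limit = RISK_ORDER[max_risk]
    return [
        r for r in records
        if r.approval_source is not None
        and r.approved_on is not None
        and RISK_ORDER[r.risk_class] <= limit
    ]


# Placeholder records for illustration only.
records = [
    TrainingRecord("Approved efficacy claim for Product X.", "Product X", "v2",
                   "MLR-0001", date(2025, 1, 15), "low"),
    TrainingRecord("Draft claim still in review.", "Product X", "v2",
                   None, None, "low"),
    TrainingRecord("Approved but high-risk comparative claim.", "Product X", "v2",
                   "MLR-0002", date(2025, 2, 1), "high"),
]

training_set = select_approved(records)
print([r.approval_source for r in training_set])  # ['MLR-0001']
```

Keeping the metadata on every record, rather than in a separate spreadsheet, makes it easier to trace any training example back to its approval source during validation.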

McKinsey research shows that domain-tuned AI models can deliver up to 60% higher task accuracy and 40% faster time-to-approval compared to untuned systems (McKinsey & Company, 2024).

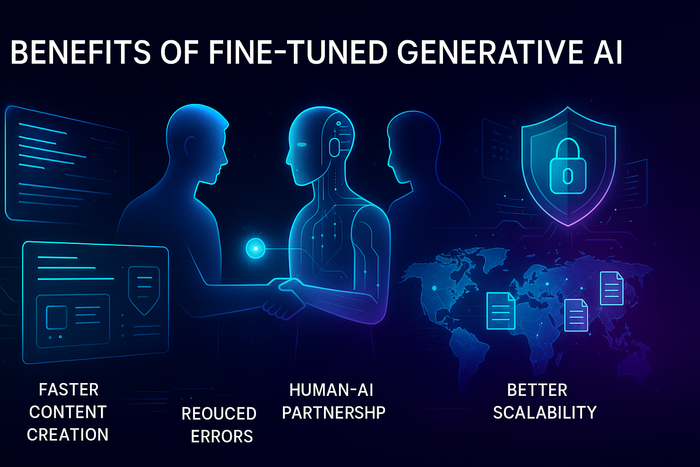

Benefits of Fine-Tuned Generative AI

When AI understands your data, it becomes a compliance ally—not a liability.

- Faster content creation: First drafts align with MLR and require fewer rounds of review.

- Reduced errors: The AI avoids unapproved phrasing or claims.

- Protected data: Private cloud or on-prem deployments keep sensitive information under your organization’s control.

- Better scalability: Teams can safely reuse pre-approved content across global markets.

- Human-AI partnership: Writers and reviewers spend more time refining insights, not fixing AI mistakes.

A report by IQVIA notes that pharma teams using fine-tuned, domain-specific AI models saw a 33% reduction in MLR cycle time—primarily by catching claim errors earlier (IQVIA, 2025).

👉 Want to make AI both faster and safer for your organization?

CI Life helps pharma teams fine-tune private AI models trained on your approved data, so you can scale compliant content with confidence. Schedule a consultation today.

How to Build a Fine-Tuned Model Safely

Pharma organizations can start small by fine-tuning AI for one product line or content category at a time.

- Use only approved data
  - Feed the model content reviewed and approved by MLR.
  - Exclude unverified external data unless validated by SMEs.

- Involve Compliance and IT early
  - Choose vendors that meet HIPAA, GDPR, and 21 CFR Part 11 standards.
  - Require model explainability and audit logs.

- Create a governance framework
  - Define ownership, change controls, and validation schedules.
  - Align model documentation with your Quality Management System (QMS).

- Continuously monitor outputs
  - Test for hallucinations and unapproved phrasing.
  - Track drift over time and retrain when accuracy drops.

- Integrate with your workflow
  - Connect fine-tuned models to content hubs like Veeva or Salesforce Life Sciences Cloud.
  - Pair with pre-screening tools for layered compliance checks.
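The "test for unapproved phrasing" step can be sketched as a simple pre-screen that runs before human review. This is a minimal example under stated assumptions: the `APPROVED_CLAIMS` set and `FLAGGED_TERMS` list are invented placeholders, and exact-match lookup stands in for whatever matching your claims library actually supports. It does not replace MLR review.

```python
import re
from typing import Dict, List

# Hypothetical data; in practice these would be loaded from the approved claims library.
APPROVED_CLAIMS = {
    "product x reduced symptom scores in a 12-week study",
}
FLAGGED_TERMS = ["cure", "guaranteed", "risk-free", "no side effects"]


def screen_draft(draft: str) -> Dict[str, List]:
    """Split a draft into sentences and sort them into flagged and needs-review buckets."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    flagged = []       # sentences containing a flagged term, with the terms found
    needs_review = []  # sentences that match no approved claim
    for sentence in sentences:
        normalized = sentence.lower().rstrip(".!?")
        hits = [t for t in FLAGGED_TERMS
                if re.search(rf"\b{re.escape(t)}\b", normalized)]
        if hits:
            flagged.append((sentence, hits))
        elif normalized not in APPROVED_CLAIMS:
            needs_review.append(sentence)
    return {"flagged": flagged, "needs_review": needs_review}


draft = (
    "Product X reduced symptom scores in a 12-week study. "
    "Product X is a guaranteed cure. "
    "Ask your doctor."
)
result = screen_draft(draft)
print(result["flagged"])       # [('Product X is a guaranteed cure.', ['cure', 'guaranteed'])]
print(result["needs_review"])  # ['Ask your doctor.']
```

A check like this is deliberately conservative: anything it cannot match to an approved claim goes to a reviewer rather than being passed automatically.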

Challenges to Watch

- Data prep takes time: Labeling and cleaning data for training can take months.

- Model drift: Outputs can shift if not retrained on updated data.

- Validation complexity: Fine-tuned models must meet internal and regulatory validation standards.

- Cost of scale: Training models on secure infrastructure requires investment, though cloud partnerships can reduce it.
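One way to watch for the model drift described above is to track how often recent outputs pass SME review and flag the model for retraining when that rate falls below a threshold. The sketch below assumes a rolling window of pass/fail review results; the `DriftMonitor` class, window size, and threshold are illustrative choices, not validated values.

```python
from collections import deque
from typing import Optional


class DriftMonitor:
    """Rolling pass rate over the most recent reviewed outputs (illustrative only)."""

    def __init__(self, window: int = 50, threshold: float = 0.9) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.threshold = threshold

    def record(self, passed_review: bool) -> None:
        """Store whether one output passed SME review."""
        self.results.append(passed_review)

    def accuracy(self) -> Optional[float]:
        """Share of outputs in the window that passed review; None if nothing recorded."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        """True once the window is full and the pass rate is below the threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.threshold


monitor = DriftMonitor(window=4, threshold=0.75)
for passed in [True, True, True, True, False, False]:
    monitor.record(passed)

print(monitor.accuracy(), monitor.needs_retraining())  # 0.5 True
```

Waiting for a full window before raising the flag avoids triggering a retraining cycle on the first one or two failed reviews.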

According to Deloitte Life Sciences Insights 2025, pharma companies that start with small, validated AI pilots before scaling see ROI up to 4x higher than those that skip governance (Deloitte, 2025).

Want to measure how AI drives real value in pharma?

Read our CI Life blog Measuring AI ROI in Pharma: Frameworks, Metrics, and Case Studies. It explores how life sciences teams track the business impact of AI projects—from time saved to compliance costs reduced.

Why This Matters for the Future of Pharma

Fine-tuned AI isn’t just smarter; it’s safer. It understands approved language, knows what it can and can’t say, and keeps sensitive data where it belongs. As regulators look more closely at AI use in pharma, compliant, domain-specific models will become the industry standard.

Companies that start building this foundation now will:

- Cut review and content approval times

- Reduce compliance risks

- Maintain patient and regulator trust

- Prove measurable ROI from AI investments

👉 Ready to bring compliant, fine-tuned AI into your pharma workflows?

CI Life specializes in helping life sciences organizations design secure AI frameworks, build claims libraries, and fine-tune models for accuracy and compliance. Talk with our experts today to learn how to start safely.
