The AI Audit Checklist: What to Review, When, and Why

Aug 12, 2025 | 4 min read

Why AI Auditing Matters

In regulated sectors like finance, cryptocurrency, and pharmaceuticals, AI is now integral to core processes – from fraud detection and risk analysis to drug development and clinical predictions. But this growing reliance brings significant risk. If your AI system makes an unethical or non-compliant decision, the damage can range from fines and lawsuits to patient harm or public scandal.

To avoid these pitfalls, organizations must treat AI auditing as a regular, proactive process – not a one-time exercise. Audits help verify that AI systems align with regulations, function as intended, and are not biased, insecure, or opaque. This checklist outlines what to review, when, and who’s responsible.

What to Audit

1. Training Data & Data Governance

- Confirm data is high-quality, legally sourced, and properly labeled.

- Screen for bias and ensure data represents all relevant populations.

- Audit consent records, privacy protections, and data lineage.

- Frequency: Before model deployment and whenever data sources change.

- Why: Biased or mislabeled data undermines the model and can create legal liability, especially in regulated domains.
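
One way to make the representation check above concrete is to compare each group's share of the training data against a reference share. The sketch below is a minimal illustration, not a prescribed method: the function name `representation_gaps`, the `region` attribute, the reference shares, and the 5-point tolerance are all assumptions chosen for the example.

```python
from collections import Counter

def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return groups whose share of the data differs from the reference
    share by more than `tolerance` (absolute difference)."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps

# Toy data (hypothetical): 8 records from "north", 2 from "south".
records = [{"region": "north"}] * 8 + [{"region": "south"}] * 2
gaps = representation_gaps(records, "region", {"north": 0.5, "south": 0.5})
```

In practice the reference shares would come from whatever population the model is meant to serve, and the result would be filed with the other data governance records.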

2. Model Fairness & Bias Testing

- Run fairness tests on outputs across different demographics.

- Validate performance metrics (accuracy, recall, etc.).

- Record steps taken to reduce bias and ensure transparency.

- Frequency: At deployment and at least annually, or more often for high-risk models.

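- Why: Regulators expect AI to be non-discriminatory and explainable. In finance, fair lending laws prohibit discriminatory outcomes and SR 11-7 sets expectations for model validation; healthcare regulators apply similar scrutiny.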
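
A fairness test of the kind described above can start very simply: compute the same metrics per demographic group and compare them. The sketch below assumes binary labels and predictions and uses hypothetical group names "A" and "B"; the function names are illustrative. Any pass/fail cut-off, such as the 0.8 ratio loosely borrowed from the four-fifths rule of thumb in US employment guidance, is an assumption to agree with your compliance team, not something this checklist prescribes.

```python
from collections import defaultdict

def group_metrics(rows):
    """rows: iterable of (group, y_true, y_pred) with 0/1 values.
    Returns per-group selection rate and recall."""
    stats = defaultdict(lambda: {"n": 0, "selected": 0, "pos": 0, "tp": 0})
    for group, y_true, y_pred in rows:
        s = stats[group]
        s["n"] += 1
        s["selected"] += y_pred
        s["pos"] += y_true
        s["tp"] += 1 if (y_true == 1 and y_pred == 1) else 0
    return {
        group: {
            "selection_rate": s["selected"] / s["n"],
            # Recall is undefined when a group has no positive cases.
            "recall": s["tp"] / s["pos"] if s["pos"] else None,
        }
        for group, s in stats.items()
    }

def selection_rate_ratio(metrics):
    """Lowest group selection rate divided by the highest."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return min(rates) / max(rates) if max(rates) > 0 else None

# Toy data (hypothetical): (group, actual outcome, model prediction).
rows = [
    ("A", 1, 1), ("A", 0, 1), ("A", 1, 1), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 0, 0), ("B", 0, 0),
]
metrics = group_metrics(rows)
ratio = selection_rate_ratio(metrics)
```

Here group A is selected three times as often as group B, and recall also differs between the groups, which is the kind of gap an audit would record and investigate.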

3. Decision Outputs & Explainability

- Spot-check decisions and predictions.

- Use explainability tools (e.g. SHAP, LIME) to assess how decisions were made.

- Monitor for anomalies and flag unexpected trends.

- Frequency: Continuous monitoring plus periodic reviews.

- Why: Several regulations expect automated decisions to be explainable to the people they affect, often described as a "right to explanation." If you can’t explain an AI decision, it’s a red flag.
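
SHAP and LIME are separate libraries with their own APIs, so they are not reproduced here. As a dependency-free stand-in, the sketch below uses permutation importance, a different and simpler model-agnostic technique: shuffle one feature at a time and measure how much accuracy drops. The toy model, feature layout, and function names are all hypothetical.

```python
import random

def permutation_importance(predict, rows, labels, n_features, repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(x) == y for x, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [x[j] for x in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by a shuffled value.
            shuffled = [x[:j] + (v,) + x[j + 1:] for x, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical stand-in model: approves when feature 0 (income) is at least 50.
def toy_model(x):
    return 1 if x[0] >= 50 else 0

# Feature 1 is deliberately ignored by the model.
rows = [(income, income % 7) for income in range(40, 60)]
labels = [toy_model(x) for x in rows]
importances = permutation_importance(toy_model, rows, labels, n_features=2)
```

A feature the model ignores scores zero, while a feature the decision depends on shows a clear accuracy drop. For per-decision explanations, which is what an individual customer or patient query usually needs, a tool such as SHAP or LIME is the better fit.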

4. Security, Privacy & Access

- Audit who can access sensitive data, retrain models, or change system parameters.

- Check encryption standards and privacy protocols.

- Maintain detailed access logs and user roles.

- Frequency: Quarterly, and whenever staff change or systems are updated.

- Why: Unauthorized access or leaks (e.g. of patient or financial data) are a major compliance risk.
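
An access audit often comes down to replaying the access log against the roles each user actually holds. The sketch below assumes a simple log of `user` and `action` entries; the role names, action names, and users are hypothetical and would map to whatever your identity system records.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "ml_engineer": {"retrain_model", "read_training_data"},
    "analyst": {"read_predictions"},
}

def unauthorized_events(access_log, user_roles, role_permissions=ROLE_PERMISSIONS):
    """Return log events whose action is not permitted by the user's role.
    Users with no known role are treated as having no permissions."""
    flagged = []
    for event in access_log:
        role = user_roles.get(event["user"])
        allowed = role_permissions.get(role, set())
        if event["action"] not in allowed:
            flagged.append(event)
    return flagged

user_roles = {"dana": "ml_engineer", "lee": "analyst"}
access_log = [
    {"user": "dana", "action": "retrain_model"},
    {"user": "lee", "action": "retrain_model"},     # analyst retraining a model
    {"user": "sam", "action": "read_predictions"},  # no role on record
]
flagged = unauthorized_events(access_log, user_roles)
```

Treating unknown users as unauthorized is a deliberate choice here: an account with no recorded role is itself an audit finding.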

5. Documentation & Governance

- Keep model cards, data sheets, change logs, and risk assessments.

- Maintain an up-to-date inventory of all AI systems and versions.

- Record ethical reviews and committee decisions.

- Frequency: Continuously updated, reviewed before audits.

- Why: Missing documentation is a top audit failure point.
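
The inventory and its supporting documents can be checked mechanically before an audit. The sketch below models one inventory entry and reports which artifacts are missing; the record fields, the list of required artifacts, and the file paths are assumptions drawn from the items listed above, not a standard schema.

```python
from dataclasses import dataclass, field

# Artifact names taken from the checklist above; adjust to your own policy.
REQUIRED_ARTIFACTS = ("model_card", "data_sheet", "change_log", "risk_assessment")

@dataclass
class ModelRecord:
    name: str
    version: str
    owner: str
    artifacts: dict = field(default_factory=dict)  # artifact name -> location

    def missing_artifacts(self):
        """List required artifacts that have no recorded location."""
        return [a for a in REQUIRED_ARTIFACTS if not self.artifacts.get(a)]

# Hypothetical inventory entry with two of the four artifacts on file.
record = ModelRecord(
    name="credit_scoring",
    version="2.1",
    owner="risk-team",
    artifacts={
        "model_card": "docs/credit/model_card.md",
        "change_log": "docs/credit/CHANGELOG.md",
    },
)
missing = record.missing_artifacts()
```

Running a check like this across the whole inventory on a schedule turns "missing documentation" from an audit surprise into a routine report.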

6. Human Oversight & Accountability

- Ensure humans are involved in critical decisions.

- Define who owns each model and who signs off on outputs.

- Log all human reviews or interventions.

- Frequency: Ongoing with periodic audit sampling.

- Why: AI isn’t a scapegoat – people are still responsible. Oversight prevents automation without accountability.
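
Logging human reviews pays off when the log can be sampled and summarized. The sketch below assumes each review is stored with the model's output, the reviewer, and the final decision; it computes how often reviewers override the model and draws a reproducible random sample for audit. Field names, identifiers, and the sample size are hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class ReviewRecord:
    decision_id: str
    model_output: str
    reviewer: str
    final_decision: str

    @property
    def overridden(self):
        return self.model_output != self.final_decision

def override_rate(records):
    """Share of reviewed decisions where the human changed the outcome."""
    return sum(r.overridden for r in records) / len(records) if records else 0.0

def audit_sample(records, k, seed=0):
    """Reproducible random sample of review records for periodic audit."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))

# Toy review log (hypothetical).
reviews = [
    ReviewRecord("d1", "approve", "dana", "approve"),
    ReviewRecord("d2", "deny", "lee", "approve"),   # human override
    ReviewRecord("d3", "approve", "dana", "approve"),
    ReviewRecord("d4", "deny", "lee", "deny"),
]
rate = override_rate(reviews)
sample = audit_sample(reviews, k=2)
```

An override rate near zero can mean the model is excellent or that reviewers are rubber-stamping it, so the sampled cases are worth reading rather than just counting.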

7. Third-Party Tools & APIs

- Review external models/tools used in your workflows.

- Require transparency and audit artifacts from vendors.

- Include compliance terms in vendor contracts.

- Frequency: Before onboarding, then annually.

- Why: You’re accountable for third-party tools, too – even if you didn’t build them.

Having trouble auditing AI in your business? Schedule an AI Strategy Workshop with CI Digital to learn more about remaining compliant with AI.

When to Audit

- Pre-Deployment: Before launch, and before redeploying a model retrained on new data.

- Regular Cadence: Annual full audits for most models; quarterly or semiannual for high-risk ones.

- Continuous Monitoring: Real-time alerts for drift, anomalies, or accuracy drops.

- Event-Based: After incidents, major updates, or regulatory changes.
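
For the continuous monitoring item above, drift is commonly tracked with a distribution comparison such as the Population Stability Index (PSI), which sums (actual share - expected share) × ln(actual share / expected share) over score bins. The sketch below is a minimal version under stated assumptions: the bin edges and score values are made up, and the small `eps` floor for empty bins is a simplification. Alert thresholds (0.1 and 0.25 are often quoted rules of thumb) should be treated as assumptions to calibrate, not fixed rules.

```python
import math

def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI between a baseline sample and a recent sample of scores.
    `edges` are ascending bin boundaries; empty bins are floored at `eps`."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # index of the bin holding v
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Toy model scores (hypothetical): baseline vs. a recent window.
edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.95, 0.85]

psi_same = population_stability_index(baseline, baseline, edges)
psi_shift = population_stability_index(baseline, recent, edges)
```

Identical distributions give a PSI of zero, while the shifted window above scores far higher. A value above the agreed threshold is the kind of signal that should trigger an event-based audit.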

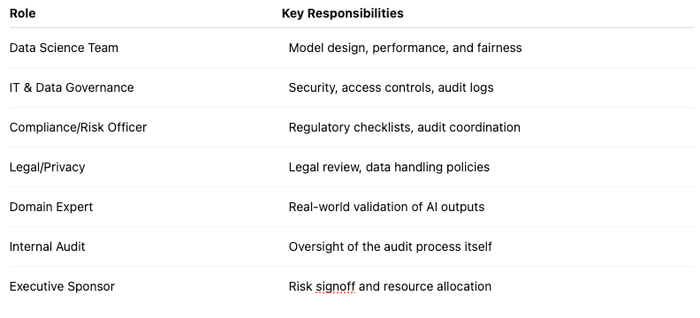

Roles & Responsibilities

Sector-Specific Considerations

Finance

- Follow the Federal Reserve’s SR 11-7 guidance on model risk management for model validation.

- Fair lending laws require bias testing in credit models.

- Document human overrides and explainability.

Crypto

- AML/KYC compliance is crucial.

- Ensure audit trails for transactions flagged by AI.

- Validate that trading bots or fraud AI are secure and non-manipulative.
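
An audit trail is only useful if it cannot be quietly edited after the fact. One common way to get that property, shown here purely as an illustration, is a hash chain: each log entry stores the hash of the previous entry, so changing any earlier record breaks verification. The entry fields and transaction payloads below are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash, payload):
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_entry(chain, payload):
    """Append a record that commits to the hash of the previous record."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    entry = {"prev": prev_hash, "payload": payload, "hash": _digest(prev_hash, payload)}
    chain.append(entry)
    return entry

def verify_chain(chain):
    """Return True only if every entry's hash and back-link are intact."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash:
            return False
        if _digest(entry["prev"], entry["payload"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical AI-flagged transactions.
trail = []
append_entry(trail, {"tx": "tx-001", "flag": "aml_risk", "amount": 9500})
append_entry(trail, {"tx": "tx-002", "flag": "velocity", "amount": 120})
intact = verify_chain(trail)
```

This only detects tampering within the log itself; a production trail would also need the latest hash anchored somewhere the log's administrators cannot rewrite.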

Pharma/Healthcare

- Align with the FDA’s Good Machine Learning Practice (GMLP) guiding principles.

- Track model performance continuously (algorithmovigilance).

- Ensure compliance with HIPAA and clinical safety standards.


Conclusion: Stay Audit-Ready, Stay Compliant

As AI becomes central to operations, your compliance readiness must evolve with it. Auditing AI isn’t just about checking boxes – it’s about building safer, fairer, and more effective systems. The right audit process doesn’t slow you down; it speeds up your ability to scale AI confidently.

Start with this checklist. Build it into your governance lifecycle. Own every piece of your AI – from the training data to the outputs and everything in between.

Sources: AI Compliance Audit Checklist (VKTR), DEV Community AI Checklist 2025, Wolters Kluwer, FDA AI Draft Guidance, EU AI Act Brief, SR 11-7, MiCA Regulation, HIPAA Guidance, and GMLP resources.
